How to Write an Actionable Alert

Writing a powerful detection is great, but if your SOC/IR team can't act upon it, how useful is it really? This article will serve as a guide on how to write an actionable alert.

Intro

“If a tree falls in a forest and no one is around to hear it, does it make a sound?”

This mantra about possibly non-existent trees very much applies to detection engineers. If you write a great rule to detect malicious behavior, but no one (or nothing) acts upon the alert, did you really catch the threat actor? Or worse, an analyst acts on the alert but responds incorrectly. In this article, I will cover the importance of not only writing solid detections, but also solid alerts. The target audience of this blog post is security professionals who write custom detections in their SIEM or other similar system.

Definitions

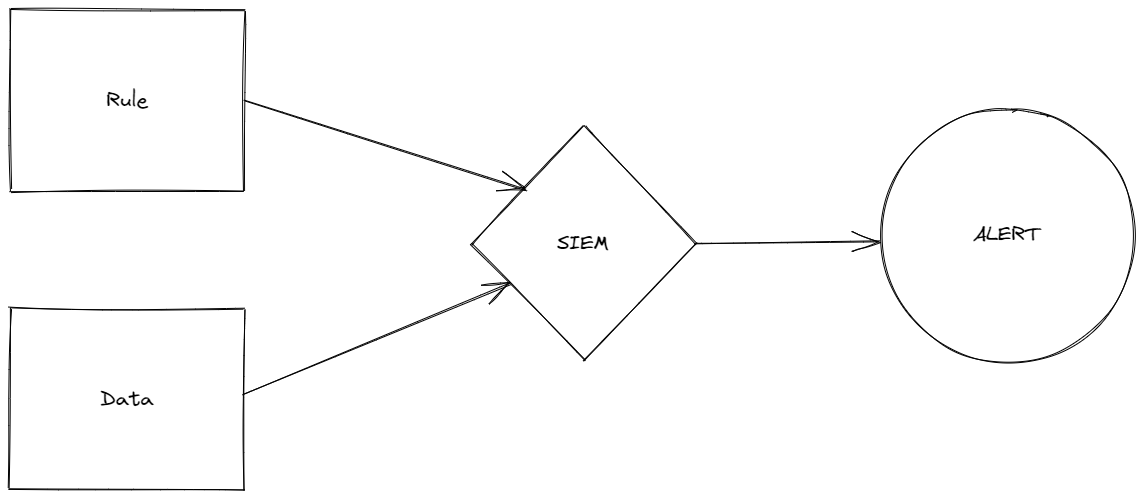

Before we get much further, I’d like to clarify how I define a rule as opposed to an alert. In my lexicon, a rule (or query) is the construct by which one searches for a behavior (often malicious) within a data set. This rule can be streaming or scheduled. The alert, on the other hand, is the output of that rule. A rule and data will enter a SIEM and the output is an alert generating a ticket or case in a downstream system.

Writing a Good Rule

There’s been a number of great blog posts and articles that talk about how to write a good rule. I’ll try to briefly summarize a few of the pieces that inspired me. (If you’re generally unfamiliar with detection engineering, I highly suggest you read these articles as it will help your understanding with the rest of this article.)

Pyramid of Pain

I think any article on this topic would be remiss if it didn’t start by referencing the Pyramid of Pain. This piece by David Bianco has really pushed detection engineering forward to where detection engineers now strive to move beyond the most basic indicators and towards the top of the pyramid - TTPs.

Funnel of Fidelity

Next up is one of my favorite pieces on the Funnel of Fidelity. This series of pieces covers the straining of data to find the pieces that accurately represent the behavior you’re looking for. In general, I recommend all of SpecterOps and Jared Atkinson’s pieces. They’re well worth a read.

Detection Development Lifecycle

It can never hurt to sprinkle in some personal bias. I think the Detection Lifecycle that we utilize at Snowflake is a pretty good place to start when it comes to learning about building solid detections.

What Makes a Good Alert

So now that we know what makes a good rule, let’s dive into what makes a good alert. In my experience, there are a few qualities that make an alert good.

-

Immediately human actionable. If you as a detection engineer are going to present an alert, the analyst who’s responding to it should have the tools and information they need to immediately make a decision and take an action.

-

Automatically enriched. If the first step an analyst needs to take when triaging an alert is to collect additional data or information, I consider this a failure.

-

Well prioritized. When presented with a slew of alerts to work, the analyst should be able to clearly identify which is the most important to work first and why.

-

Grouped and correlated. If multiple different alerts trigger for a system or user, they should be grouped and correlated so the analyst(s) working the case doesn’t miss that multiple related alerts exist.

Let’s dive into how to achieve these properties.

Human Actionable

In my opinion, one of the most important properties a good alert should have is that it needs to be “human” actionable. For this to be true, a human needs to be able to review the content of the alert, know what their next steps should be, and have the tools necessary to take those next steps. A key qualifier in this section is “human” actionable. A good alert should require a human to make a decision as the next step in the response process. This differs from “machine” actionable where the next steps could be automated or executed by code. Let’s take a look at a bad alert that does not demonstrate this property.

Title: Login from New Device

User: jane.doe@snowflake.com

Details: Identified user logged in from a new device. IPs were 123.34.12.11.

Timestamps: 14:33 UTC

Playbook: Reach out to user and confirm if the login was from them. If not,

proceed to reset their password.

Let’s assume that the rule itself is accurate and the login was from a new device. What’s wrong with this alert? Well the first step is to reach out to the user. This step is automatable and does not require a human to take the next step. When you’re designing your alert, ask yourself, “Do we require the intelligence and ingenuity of our human analysts to make a decision or act on this information?” If the answer is no, then more should be done to improve the alert.

On the subject of automation, I personally believe that any automation that is designed to improve the fidelity or the quality of information of an alert should be done by detection engineers. Incident response automation should focus on response and remediation.

There’s a term I use with alert analysis that I call “friction”. Friction is anything that slows down analysis in an unhelpful way. For example, having to go to another system to look something up, not knowing what playbook to use, a swarm of false positives, etc. All of these add friction to the alert triage process slowing it down and increasing the possibility of errors. As a threat detection engineer, you should strive to reduce friction when designing your alerts.

So what does a good alert look like?

Title: Phishing Reported by User

User: john.doe@snowflake.com

Details: The attached email was reported as phishing by the user. The email

has been removed from the user's inboxes. Similar emails were found in

the following user's inboxes and have been temporarily quarantined:

jane.doe@snowflake.com, bob.human@snowflake.com, lee.user@snowflake.com.

Information on the attachment and URLs in the email is attached to the ticket.

Timestamps: 14:33 UTC

Playbook: Review the attached phishing emails and analysis. To remove the similar

emails from the associated user's inboxes, click here <link>. To release from

quarantine back into the users' inbox, click here <link>.

Attachments: <simulated analysis>

This alert does a few things right: first, it has automated analysis of the phishing email and attached it to the ticket. This reduces the friction an analyst experiences when working a ticket. Second, it’s clear what has already been done: the reported email has been removed from the user’s inbox. Third, it presents a call to action: the system has identified similar emails and requires human analysis to determine what the next steps should be. Finally, it makes it easy to take the next steps by presenting links that trigger a playbook. When designing alerts, make sure to consider that you should reduce analysis friction and make it easy to take the next steps.

Automatically Enriched

This property is highly related to the one above, and is arguably a subset; however, I believe it’s important enough where I’ve decided to specifically call it out. This property can be summed up succinctly by answering the following question: Does the human analyst need to go into another system to gather additional information? If the answer is yes, you can improve the alert.

With that in mind, let’s revisit the example above.

Title: Phishing Reported by User

User: john.doe@snowflake.com

Timestamps: 14:33 UTC

When an analyst gets an alert about phishing, it’s your job as a detection engineer to understand what questions the SOC/IR will have and work to answer as many of those in the alert as you can. (The best way to know what questions they’ll ask is to go talk to them.) In this case, some common questions when triaging phishing are:

- Did the user engage with the phish? Did they respond, click a link, or download anything?

- Who else was targeted?

- What do we know about the phishing links/attachments/campaign?

By knowing what questions the analyst will have, we can enrich our alerts (with whatever SIEM/SOAR you’re using) to reduce analyst friction.

Well Prioritized

Doing good work is easy. You just have to do the right things in the right order. With the previous example, we’ve helped the SOC/IR do the right things by providing them the tools they need to execute. The next part we need to help with is alert prioritization. Most SOCs have some sort of SLA when it comes to alerts that varies by severity. A basic example could be

| Critical | High | Medium | Low | |

|---|---|---|---|---|

| SLA | Pageable | 1 hour | 12 hours | 24 hours |

If you write the most accurate alert for someone running whoami on a laptop and you set it as a critical paging your IR at 3 AM, you’re going to have some very unhappy responders. As a detection engineer, you can’t forget that you have a customer and that’s the individuals responding to your alerts. It is of utmost importance that you equip them with the information they need to make the correct decisions quickly. And that means helping them prioritize their work.

When it comes to severity, I’d recommend that your team decide on a framework on how it’s set. The goal is that if two different engineers write the same rule, they would end up with the same severity. Some guiding questions could be:

- Does this signal mean customer data has been compromised?

- What is our confidence that this alert is always going to be a true positive?

- Does this signal affect the integrity/availability of administrative credentials/permissions?

Ideally, you want to keep your framework to a limited number of questions. You don’t want your detection engineers to feel like they’re going through an audit every time severity is being set.

Grouped and Correlated

One of the last properties that I think is critical for writing good alerts is grouping and correlation. I’ll also associate deduplication with this category. Let me start by defining what I mean by these properties:

- Grouping/correlation: the ability to determine that two separate alerts are related

- Deduplication: the ability to determine that two or more separate alerts are for the same event and to group them into one

Let’s start off with deduplication by providing some examples. Let’s say we have an alert for the root user in an AWS account having a failed login. In this example, a penetration test is running and attempts to brute force the account 10,000 times. Without deduplication, IR would receive 10,000 separate tickets/cases. However, if we’re doing alert deduplication properly, they would receive 1 ticket with information that the alert triggered 10,000 times.

Depending on your SIEM, it is often the responsibility of the detection engineer to decide how alerts are deduplicated. For instance, Panther offers deduplication as part of its Python functionality. As a detection engineer, you can solve for deduplication by asking yourself the following questions: what key properties identify a unique instance of this event? What do I want to show IR if the same event fires multiple times? At Snowflake, we often structure our alerts as “Actor” did “Action” to “Object”. Our default deduplication says if the “actor” and “action” or “actor” and “object” are the same for a particular alert, then deduplicate. To illustrate with an example, let’s say we have an alert for an action having permission denied in AWS. We say our “actor” is the IAM user, the “action” is the AWS API, and the “object” is the request parameters. Let’s say a particular user triggers the alert on “DeleteObject” 10,000 times across 10,000 different buckets. Because the user and API action are the same, these would be deduplicated and the 10,000 events would be presented in one ticket. Similarly, if a user did a number of reconnaissance actions (let’s say GetObject, GetObjectACL, etc) against a particular bucket, these would also be grouped. That being said, as a detection engineer, you should feel free to be flexible with deduplication and choose the properties that work best for your detection.

A similar property is alert grouping/correlation. Let’s revisit the above example about permission denied for an AWS user. Let’s say we also have a detection for multiple failed logins for an AWS user. In this example, both of these alerts trigger about 3 hours apart (with permission denied occurring second) in the middle of changeover for our SOC. Without alert correlation/grouping, the analyst working the second alert may be unaware that there was previously an alert for multiple failed logins for the same user. However, if our system is able to group these alerts and show them together, then the analyst has the context they need to make smart response decisions. This capability is also a critical first step to successfully implementing Risk Based Alerting for your SOC.

Making it Happen

Much of what I discussed in this article centers on correlation and enrichment. At Snowflake, we accomplish this through our use of Snowflake as a security data lake. By ingesting all of our enterprise data into Snowflake, we’re able to execute complex correlation and enrichment queries as part of our detection pipeline. Haider and I did a talk on this and the detection lifecylce in our talk Unlocking the Magic to High-Fidelity Alerts if you want to learn more. For the areas where we can’t accomplish this for one reason or other, we rely on Tines to execute further automation.

Conclusion

I think this piece can best be summarized by remembering that if alerts are not properly triaged, then the detections are not effective. And you, as a detection engineer, have many tools to empower analysts to make the timely and correct decisions.